Nils T Siebel

People Tracking for Visual Surveillance

This page describes details of my system to track people in camera images, the Reading People Tracker.

1 Introduction

In a project at the University of Reading I started research on People Tracking in camera images for automatic visual surveillance systems. The research was carried out within the European Framework V project ADVISOR, in which we developed an integrated visual surveillance and behaviour analysis system.

The People Tracker developed within the project is based on the Leeds People Tracker, which was developed by Adam Baumberg.


2 Main Results

- The Reading People Tracker is a new modular system for tracking people and other objects in video sequences. The main contribution is a powerful and scalable structure, achieved by a complete re-design and much refactoring of the underlying Leeds People Tracker and by the addition of new functionality, resulting in

  - high robustness of the tracker to image noise and occlusion through the use of redundant trackers of different types running in parallel, and the use of multiple tracking hypotheses (see ECCV 2002 paper or my PhD thesis for details).

  - the ability to track multiple objects in an arbitrary number of cameras, writing out tracking results in XML format.

  - scalability and much improved maintainability of the people tracker (see ICSM 2002 paper for details).

  - new documentation for maintaining the People Tracker, including a well-defined and documented software maintenance process (see JSME/SMR article for a case study on processes).

- Validation of the new tracking system to track people and vehicles (see PETS 2001 paper for the validation of an earlier version of the tracker).

- Integration of the tracker as a subsystem of ADVISOR, interfacing with other subsystems through Ethernet.

- Examination of whether and to what extent colour filtering methods can help to improve motion detection and local edge search.

- The tracker has been ported from an SGI platform to a PC running GNU/Linux to make economic system integration feasible. Also, the source code now adheres closely to the ISO/IEC 14882-1998 C++ standard, as well as IEEE POSIX 1003.1c-1995 extensions for multi-threading, making it easily portable. While the code is being maintained under GNU/Linux, it also compiled under Windows XP/2000 a while ago.

3 Tracking Algorithm

3.1 Overview of the 4 Detection / Tracking Modules

Module 1 - Motion Detector

A Motion Detector detects moving pixels in the image. It models the background as an image with no people in it. Simply subtracting it pixelwise from the current video image and thresholding the result yields the binary Motion Image. Regions (bounding boxes) with detected moving blobs are then extracted and written out as the output from this module.
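The differencing, thresholding and region extraction described above can be illustrated with a minimal sketch. It is not the tracker's own code (which is written in C++): the function names `motion_image` and `moving_regions`, the use of greyscale images stored as nested lists, the threshold value and the choice of 4-connected flood fill for blob extraction are all assumptions made for illustration.

```python
from collections import deque


def motion_image(frame, background, threshold):
    """Pixelwise absolute difference against the background, then threshold."""
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def moving_regions(motion):
    """Bounding boxes (x_min, y_min, x_max, y_max) of 4-connected blobs."""
    height = len(motion)
    width = len(motion[0]) if motion else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not motion[y][x] or seen[y][x]:
                continue
            # Flood-fill one blob, growing its bounding box as we go.
            x_min = x_max = x
            y_min = y_max = y
            queue = deque([(x, y)])
            seen[y][x] = True
            while queue:
                cx, cy = queue.popleft()
                x_min, x_max = min(x_min, cx), max(x_max, cx)
                y_min, y_max = min(y_min, cy), max(y_max, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and motion[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x_min, y_min, x_max, y_max))
    return boxes


# Hypothetical 6x4 greyscale scene with a uniform background.
background = [[100] * 6 for _ in range(4)]
frame = [row[:] for row in background]
frame[1][1] = frame[2][1] = 200   # a high-contrast moving blob
frame[3][5] = 250                 # a second blob
frame[1][4] = 110                 # low contrast: stays below the threshold

motion = motion_image(frame, background, threshold=30)
boxes = moving_regions(motion)
print(boxes)  # [(1, 1, 1, 2), (5, 3, 5, 3)]
```

The pixel at column 4, row 1 differs from the background by only 10 grey levels, so it is missing from the motion image and from the extracted regions.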

Main features:

- simple background image subtraction

- image filtering (spatial median filter, dilation) depending on available CPU time

- temporal inclusion of static objects into the background

- background modelling using a speed-optimised median filter

- static regions incorporated into background (multi-layer background).
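To illustrate the idea behind median-based background modelling, here is a minimal sketch that takes the per-pixel median over a short history of frames. The function name `median_background` and the nested-list image representation are assumptions, and this straightforward version is not speed-optimised in the way the list above says the tracker's filter is.

```python
from statistics import median


def median_background(frames):
    """Per-pixel temporal median over a list of equally sized greyscale frames."""
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [median(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical 3x1 scene: a bright object covers the middle pixel in 2 of 5 frames.
history = [
    [[10, 20, 30]],
    [[10, 200, 30]],
    [[10, 20, 30]],
    [[10, 210, 30]],
    [[10, 20, 30]],
]
estimated_background = median_background(history)
print(estimated_background)  # [[10, 20, 30]]
```

As long as a pixel shows the background in more than half of the frames, the median ignores the object passing over it.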

Example:

This example shows how pixelwise differencing of video and background images leads to the difference image which is then thresholded to give the binary motion image. The example also shows how a low contrast of an area against the background (in this case the white coat against the light yellow background) results in non-detection of that area in the motion image.

Module 2 - Region Tracker

A Region Tracker tracks these moving regions (i.e. bounding boxes) over time. This includes region splitting and merging using predictions from the previous frame.

Main features:

- region splitting and merging using predictions

- adjust bounding box from Active Shape Tracker results

- identify static regions for background integration.
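Prediction-based merging can be sketched as follows, under stated assumptions: a constant per-frame velocity for the prediction, an overlap test for matching, and the hypothetical helper names `predict_box`, `overlap_area`, `merge_boxes` and `match_prediction`. The tracker's actual matching rules are not given on this page and may well differ.

```python
def predict_box(box, velocity):
    """Shift a box (x_min, y_min, x_max, y_max) by a per-frame velocity (dx, dy)."""
    dx, dy = velocity
    return (box[0] + dx, box[1] + dy, box[2] + dx, box[3] + dy)


def overlap_area(a, b):
    """Area of the intersection of two boxes (0 if they do not overlap)."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(w, 0) * max(h, 0)


def merge_boxes(boxes):
    """Smallest box enclosing all given boxes."""
    return (min(b[0] for b in boxes), min(b[1] for b in boxes),
            max(b[2] for b in boxes), max(b[3] for b in boxes))


def match_prediction(predicted, detections):
    """Merge every detected region that overlaps the predicted box.

    Returns None if no detection overlaps the prediction.
    """
    hits = [d for d in detections if overlap_area(predicted, d) > 0]
    return merge_boxes(hits) if hits else None


# A person tracked in the previous frame, moving 2 pixels to the right per frame.
predicted = predict_box((10, 10, 20, 40), velocity=(2, 0))

# The Motion Detector reports the person as two fragments, plus an unrelated region.
detections = [(12, 10, 22, 22), (13, 26, 21, 40), (60, 10, 70, 40)]
merged = match_prediction(predicted, detections)
print(merged)  # (12, 10, 22, 40)
```

Splitting is the reverse case: when two predicted boxes both overlap one detected region, that region would be divided between the two tracks.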


Module 3 - Head Detector

A Head Detector makes rapid guesses of head positions in all detected moving regions.

Main features:

- works in binary motion image

- looks for peaks in detected moving regions

- vertical pixel histogram with low-pass filter

- optimised for speed not accuracy
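The features listed above suggest a procedure along the following lines. This is a sketch under assumptions, not the tracker's implementation: the histogram is taken to be the number of motion pixels per image column, the low-pass filter is a simple moving average, and the names `vertical_histogram`, `low_pass` and `head_candidates` are hypothetical.

```python
def vertical_histogram(motion, box):
    """Count motion pixels in each column of the box (bounds inclusive)."""
    x_min, y_min, x_max, y_max = box
    return [sum(motion[y][x] for y in range(y_min, y_max + 1))
            for x in range(x_min, x_max + 1)]


def low_pass(values, radius=1):
    """Moving average; the window shrinks at the borders."""
    smoothed = []
    for i in range(len(values)):
        window = values[max(0, i - radius): i + radius + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def head_candidates(motion, box, radius=1):
    """Return (x, y) guesses: histogram peaks, placed at the topmost motion pixel."""
    x_min, y_min, x_max, y_max = box
    hist = low_pass(vertical_histogram(motion, box), radius)
    heads = []
    for i, value in enumerate(hist):
        left = hist[i - 1] if i > 0 else 0
        right = hist[i + 1] if i + 1 < len(hist) else 0
        if value > left and value >= right:  # local peak of the smoothed histogram
            x = x_min + i
            for y in range(y_min, y_max + 1):
                if motion[y][x]:
                    heads.append((x, y))
                    break
    return heads


# Hypothetical binary motion image containing one person-like silhouette.
silhouette = [
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 1, 0, 0],
]
heads = head_candidates(silhouette, box=(0, 0, 6, 5))
print(heads)  # [(3, 0)]
```

The whole computation is a few passes over the region, which fits the stated goal of speed over accuracy.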

Example:

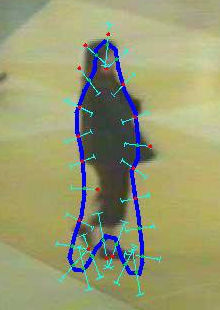

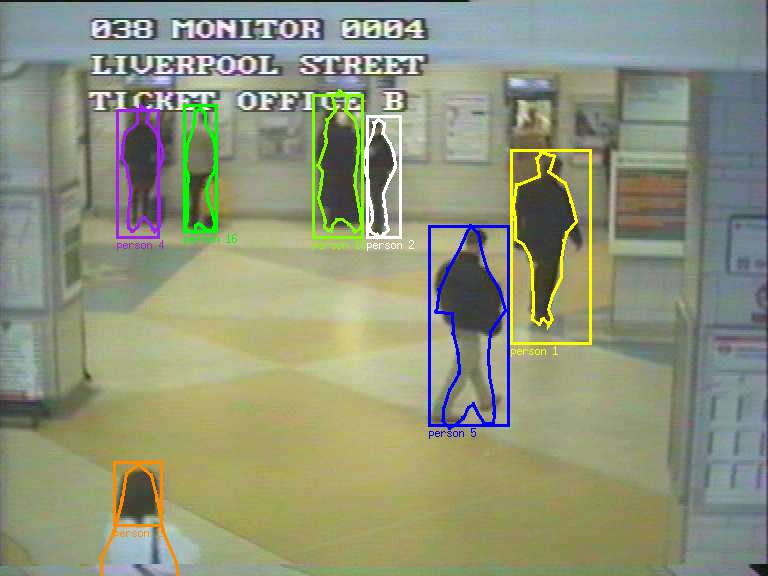

Module 4 - Active Shape Tracker

An Active Shape Tracker uses a deformable model for the 2D outline shape of a walking pedestrian to detect and track people. Contour shapes (called profiles) are initialised from the output of the Region Tracker and the Head Detector.

Main features:

- local edge search for shape fitting

- initialisation of shapes from Region Tracker, Head Detector and own predictions

- occlusion reasoning

Example:

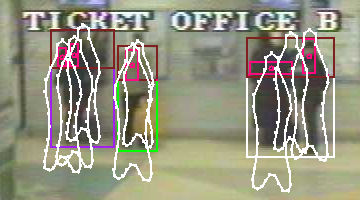

The left image shows how the output from the Region Tracker (purple, green and white boxes) and the estimated head positions detected in them by the Head Detector (drawn in red/pink) are used to provide initial hypotheses for profiles (outline shapes, drawn in white) to be tracked by the Active Shape Tracker. Each such hypothesis, as well as the Active Shape Tracker's own predictions from the previous frame, is then examined by the Active Shape Tracker using image measurements. The right image details how a local search for edges around the contour is used in an iterative optimisation loop for shape fitting.
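One iteration of such a local edge search might look like the sketch below. It makes several simplifying assumptions: the search runs along a given normal at each contour point, the edge is taken to be the largest intensity step between neighbouring samples, and each point is moved independently. The names `strongest_edge_offset` and `fit_step` are hypothetical, and the step in which the measured displacements are constrained by the deformable shape model is omitted.

```python
def strongest_edge_offset(image, point, normal, search_range):
    """Search along the normal through `point` for the strongest intensity step.

    Returns the signed offset (in whole steps) of the best edge, or 0 if no
    edge is found inside the image.
    """
    height, width = len(image), len(image[0])

    def sample(offset):
        x = round(point[0] + offset * normal[0])
        y = round(point[1] + offset * normal[1])
        if 0 <= x < width and 0 <= y < height:
            return image[y][x]
        return None

    best_offset, best_strength = 0, 0
    for offset in range(-search_range, search_range):
        inner, outer = sample(offset), sample(offset + 1)
        if inner is None or outer is None:
            continue
        strength = abs(outer - inner)
        if strength > best_strength:
            best_offset, best_strength = offset, strength
    return best_offset


def fit_step(image, points, normals, search_range=3):
    """One iteration: move each contour point to the strongest nearby edge."""
    moved = []
    for point, normal in zip(points, normals):
        offset = strongest_edge_offset(image, point, normal, search_range)
        moved.append((point[0] + offset * normal[0], point[1] + offset * normal[1]))
    return moved


# Hypothetical image with a vertical edge between columns 4 and 5.
image = [[0, 0, 0, 0, 0, 255, 255, 255] for _ in range(4)]
points = [(3, 1), (6, 2)]      # one point left of the edge, one right of it
normals = [(1, 0), (1, 0)]     # both search horizontally
fitted = fit_step(image, points, normals)
print(fitted)  # [(4, 1), (4, 2)]
```

In an iterative loop this step would be repeated, with the shape model re-fitted to the moved points each time, until the contour stops changing.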

Combining the Modules

The main goal of using more than one tracking module is to make up for deficiencies in the individual modules, thus achieving a better overall tracking performance than each single module could provide. Of course, when combining the information from different modules it is important to be aware of the main sources of error for each of them. If two modules are subject to the same type of error then there is little benefit in combining their outputs. The new People Tracker has been designed with this aspect in mind, using the redundancy introduced by the multiplicity of modules in an optimal manner.

These are the main features of the system:

- interaction between modules to avoid non- or mis-detection

- independent prediction in the two tracking modules, for greater robustness

- multiple hypotheses during tracking to recover from lost or mixed-up tracks

- all modules have camera calibration data available for their use

- through the use of software engineering principles for the software design, it is scalable and extensible (new modules...) as well as highly maintainable and portable.
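The use of multiple hypotheses can be pictured with a small sketch. Everything in it is an assumption made for illustration: the `fitness` score, the `source` labels, the threshold and the limit on kept hypotheses are hypothetical, and the tracker's real refinement rules are described in the publications rather than on this page.

```python
def refine_hypotheses(hypotheses, min_fitness, max_kept):
    """Drop weak hypotheses and keep the best-scoring remainder, best first."""
    good = [h for h in hypotheses if h["fitness"] >= min_fitness]
    good.sort(key=lambda h: h["fitness"], reverse=True)
    return good[:max_kept]


# Candidate profiles for one person, proposed by different modules.
candidates = [
    {"source": "own prediction", "fitness": 0.9},
    {"source": "head detector", "fitness": 0.2},
    {"source": "region tracker", "fitness": 0.6},
    {"source": "head detector", "fitness": 0.7},
]
kept = refine_hypotheses(candidates, min_fitness=0.5, max_kept=2)
print([h["source"] for h in kept])  # ['own prediction', 'head detector']
```

Keeping a second hypothesis alive means that if the best one turns out to follow the wrong person in a later frame, the track can fall back on an alternative instead of being lost.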

3.2 Details of the Tracking Algorithm

The complete people tracking algorithm is given below.

3.3 Example Image of Tracked People

The following image shows the output from the People Tracker after hypothesis refinement. Tracked regions (bounding boxes) and profiles (outline shapes) are shown in the image.

4 Software Engineering Aspects

The People Tracker was completely re-engineered, yielding a new design. The software is now highly maintainable and portable, and a software process for all maintenance work is well defined and documented. Extensibility and scalability were kept in mind while designing the new tracker.

The source code adheres closely to the ISO/IEC 14882-1998 C++ standard, as well as IEEE POSIX 1003.1c-1995 extensions for multi-threading, making it easily portable. While the code is being maintained under GNU/Linux, it also compiles under Windows 2000.

Members of The University of Reading's Applied Software Engineering group and I have examined the software processes in our own work on the People Tracker and the way these have influenced the maintainability of the code (see JSME/SMR paper). The re-engineering phase and its effect on the maintainability of the code were also examined more closely in a case study (see ICSM 2002 paper).

5 Special Features of our System

- next-to-market, integrated product

- combination of multiple algorithms for robustness

- redundancy helps offset bad image quality

- scalable, extensible design

- highly maintainable and portable implementation

- other trackers (e.g. W4 from UMD) achieve similar tracking performance by different means.

6 Relevant Publications

7 Acknowledgements

- This work was supported by the European Union, grant ADVISOR (IST-1999-11287)

- Thanks to Sergio Velastin (Kingston University) and to London Underground Ltd. for providing us with video sequences.

8 Source Code of the Reading People Tracker

Author of these pages:

Nils T Siebel.

Last modified on Wed May 26 2010.

This page and all files in these subdirectories are Copyright © 2004-2010 Nils T Siebel, Berlin, Germany.
